When a cadre of technologists gathered in late 2015 to establish OpenAI, they spoke in the hushed tones of priests confronting apocalypse. Artificial general intelligence, they warned, represented perhaps the gravest threat humanity had ever conjured. Their solution was elegantly paradoxical: build the dangerous thing themselves, but purely, nonprofit-edly, with mankind's interests held sacred above all.

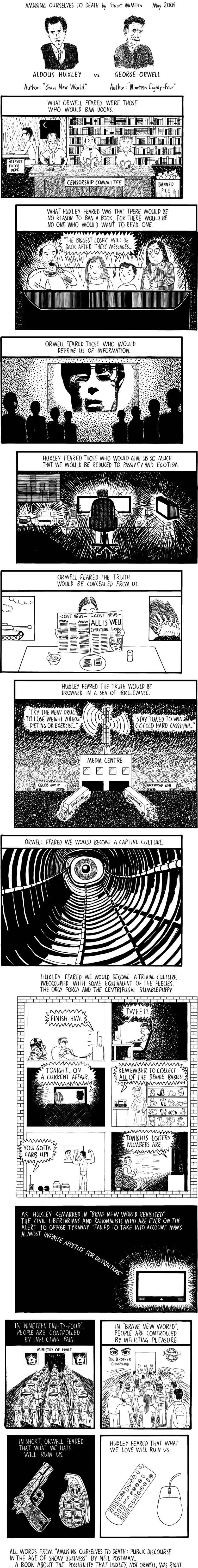

How quaint those founding documents now read. Like constitutional amendments penned by idealists and inherited by opportunists, OpenAI's original charter has become less lodestar than museum piece: admired occasionally, followed rarely, and increasingly inconvenient for those who must explain why the organisation founded to save humanity from AI has become humanity's most aggressive purveyor of it.

The metamorphosis began in earnest in 2019, when the nonprofit spawned a "capped-profit" subsidiary, a coinage of such linguistic dexterity that it would make a tax lawyer weep with admiration. Microsoft subsequently poured billions into this curious hybrid, and the safety-first missionaries found themselves genuflecting before the same altar of growth that governs their less sanctimonious rivals.

Yet it is the darker accusations accumulated since then that make OpenAI's journey particularly Dantesque.

Most troubling are the circumstances surrounding the death of Suchir Balaji, a young former OpenAI researcher turned whistleblower. Mr Balaji had spoken publicly about what he perceived as the company's cavalier approach to copyright and training data. His death, ruled a suicide, has spawned theories that respectable publications dare not print but that circulate with uncomfortable persistence through the technology industry's whisper networks. The company has denied any wrongdoing. Yet the timing, about a month after he went public, has proved impossible for critics to ignore: another data point in a pattern that some former employees describe as a culture where loyalty is demanded with something approaching religious fervour, and apostasy carries consequences both professional and personal.

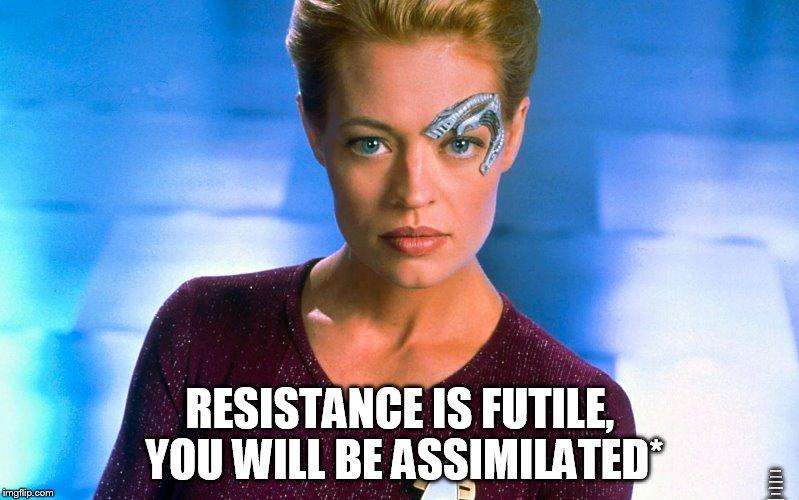

Then there is the matter of the board itself. When OpenAI announced that retired General Paul Nakasone, former director of the National Security Agency, would join its board of directors, the company framed the appointment as a source of prudent counsel on security matters. Critics saw something altogether different: the marriage of America's most powerful surveillance apparatus with its most capable artificial-intelligence company. Even Orwell might have found this too unsubtle for dystopian fiction.

Co-founder Sam Altman has himself become a figure of considerable controversy. His brief defenestration by the board in late 2023, followed by his triumphant restoration days later, revealed governance structures that appeared robust on paper but dissolved on contact with commercial reality and employee pressure. Beyond the board's terse statement that he had not been "consistently candid in his communications", whatever concerns prompted its original action remain shrouded: another opacity in an organisation whose founding documents promised transparency.

What began as a temple has become a counting house. The priests have become merchants. And the prayers for humanity's safety have become press releases. The congregation, one suspects, should have read the terms of service more carefully.